The Best ETL Tools for PostgreSQL in 2026: A Decision Framework

Definite Team

If you Google "best ETL tools for PostgreSQL" in 2026, most of the top results recommend tools that either no longer exist or are on life support.

Blendo — acquired by RudderStack. Stitch — deprioritized under Qlik after the Talend acquisition. Xplenty — folded into Integrate.io. Meltano — acquired by Matatika.

The Postgres ETL landscape looks nothing like it did five years ago. The whole paradigm shifted: ETL (transform before loading) gave way to ELT (load first, transform in the warehouse). Open-source tools like Airbyte and dlt didn't even exist when those guides were written. AI-powered analytics wasn't a category. And the survivors are consolidating fast: Fivetran acquired Census for reverse ETL and Tobiko Data (SQLMesh) for advanced transformations, then announced a merger with dbt Labs, racing to become an end-to-end platform.

The right Postgres ETL tool in 2026 depends entirely on who you are and what you're optimizing for. This guide is organized around those two questions, not alphabetically.

| Tool | Type | Postgres Support | Best For | Pricing |
|---|---|---|---|---|
| Definite | All-in-one platform | Source | Startups & SMBs wanting insights fast | ~$1,000/mo flat for a complete data platform |
| Fivetran | Managed ELT | Source & destination | Teams with existing warehouses | MAR-based (consumption) |
| Airbyte | Open-source / Cloud ELT | Source & destination | DIY teams wanting flexibility | Free (OSS) or credits-based |
| dlt | Python EL library | Source & destination | Python devs, LLM-assisted pipelines | Free (open source) |
| dbt | Transformation layer | Runs against Postgres | Any ELT team needing governed transforms | Free (Core) or Cloud tiers |
| PeerDB | CDC replication | Source → ClickHouse | Postgres-to-ClickHouse analytics | Free (open source) |
| pglogical | Native replication | Postgres ↔ Postgres/Kafka | Enterprise Postgres shops | Free (extension) |
| Singer / Meltano | DIY scripting | Source & destination | Non-standard sources, max control | Free (open source) |

If You Want Answers Tomorrow, Not Next Quarter

For startups and SMBs, the real cost of ETL isn't the tool — it's the time, complexity, and engineering burden of assembling a multi-tool pipeline. You need an ETL/ELT tool, a data warehouse, a BI platform, maybe a semantic layer, maybe dbt for transformations — and someone to maintain all of it.

By the time you've stitched that together, you've spent weeks (or months) and thousands of dollars — before answering a single business question.

That's the problem all-in-one platforms solve.

Definite

Definite is a complete data platform that replaces the fragmented stack entirely. Instead of buying Fivetran + Snowflake + Looker + dbt and gluing them together, you get:

- 500+ pre-built connectors — including Postgres as a source, Stripe, HubSpot, Salesforce, and every other tool your startup runs on

- Built-in managed warehouse — powered by DuckDB's columnar engine, no Snowflake or BigQuery bill required

- Governed semantic layer — consistent metrics (ARR, churn, NRR) enforced across your org via Cube.dev

- AI analyst (Fi) — ask questions in plain English, get instant answers, no SQL required

- Dashboards and reporting — drag-and-drop visualization, Slack alerts, Google Sheets updates

Setup takes under 30 minutes. No data engineer required. No separate vendors to negotiate.

If your Postgres database is a source of data (not your analytics warehouse), Definite can ingest it alongside every other tool your business runs — without a separate ETL tool in the picture.

Should you use Postgres as your data warehouse? We wrote an entire post on this: "We Love Postgres. We'd Never Use It as a Data Warehouse." The short version: Postgres is phenomenal for OLTP. For analytical workloads, purpose-built columnar engines can be 10-100x faster.

Best for: Startups and SMBs that need insights fast, don't have a data engineer, and want one platform instead of four.

If You Want Managed Pipelines Without Building Everything

If you already have a data warehouse (Snowflake, BigQuery, Redshift) and a BI tool, you may just need a reliable way to move data from Postgres to that warehouse. That's where managed ELT platforms come in.

Fivetran

Fivetran is the market leader in managed ELT. Its Postgres connector supports Change Data Capture (CDC) via logical replication, meaning it reads changes decoded from your write-ahead log instead of repeatedly querying your production tables. Updates stream in near real-time with minimal load on your source.
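To make the log-based approach concrete, here is a minimal sketch of how a CDC consumer might replay change events onto a replica. The event shape (`op`, `key`, `row`) is a hypothetical simplification for illustration; it is not Fivetran's internals or Postgres's actual logical decoding format.

```python
from typing import Any

# Hypothetical, simplified change events in commit order. Real logical
# decoding output carries more detail (transactions, LSNs, relation metadata).
ChangeEvent = dict[str, Any]


def apply_changes(replica: dict[int, dict], events: list[ChangeEvent]) -> dict[int, dict]:
    """Replay inserts, updates, and deletes onto a replica keyed by primary key."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Upsert: the event carries the full new row image.
            replica[key] = event["row"]
        elif op == "delete":
            replica.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return replica


events = [
    {"op": "insert", "key": 1, "row": {"id": 1, "plan": "free"}},
    {"op": "insert", "key": 2, "row": {"id": 2, "plan": "pro"}},
    {"op": "update", "key": 1, "row": {"id": 1, "plan": "pro"}},
    {"op": "delete", "key": 2},
]

replica = apply_changes({}, events)
print(replica)
```

The point of the pattern is that the consumer only ever sees what changed, so the source database is never asked to scan whole tables on a schedule.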

- 500+ connectors, fully managed and maintained

- Automatic schema drift handling — Fivetran adapts when your source tables change

- Pre-built analytics-ready data models for common sources

- Recently merged with dbt Labs, combining ingestion and transformation under one company

The caveat: Fivetran uses Monthly Active Rows (MAR) pricing. A sudden spike in source data or a high-volume connector can significantly increase your bill. And Fivetran is just the ingestion layer — you still need a warehouse ($200-500/month), a BI tool ($200-500/month), and potentially a semantic layer. The full stack cost adds up fast.
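A rough sketch of why MAR bills can jump: a row counts once per month no matter how often it changes, so the number tracks how many distinct rows are touched, not how many changes occur. This is a simplified model with made-up sample data; the exact counting rules are Fivetran's and may differ in detail.

```python
from collections import defaultdict
from datetime import date


def monthly_active_rows(changes: list[tuple[date, str, int]]) -> dict[str, int]:
    """Count distinct (table, primary key) pairs touched in each calendar month."""
    active: dict[str, set[tuple[str, int]]] = defaultdict(set)
    for changed_on, table, primary_key in changes:
        month = changed_on.strftime("%Y-%m")
        active[month].add((table, primary_key))
    return {month: len(rows) for month, rows in sorted(active.items())}


changes = [
    (date(2026, 1, 3), "orders", 1),
    (date(2026, 1, 9), "orders", 1),  # same row updated again: still one active row
    (date(2026, 1, 9), "orders", 2),
    (date(2026, 2, 1), "orders", 1),  # new month, so the row counts again
    (date(2026, 2, 2), "users", 1),
    (date(2026, 2, 2), "users", 2),
]

mar = monthly_active_rows(changes)
print(mar)
```

A backfill or a batch job that touches every row in a large table would make every one of those rows "active" for that month, which is the spike the caveat above describes.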

Best for: Data teams with an existing warehouse and budget for consumption-based pricing who want reliable, zero-maintenance data movement at scale.

Airbyte Cloud

Airbyte started as an open-source project and has grown into one of the two dominant ELT platforms. Airbyte Cloud is the managed version — you get the same connector catalog without running infrastructure.

- 350+ connectors with strong Postgres source and destination support

- Credits-based pricing (more transparent than MAR, but still usage-dependent)

- Growing community, active connector development

- Option to start on Cloud and migrate to self-hosted later if costs matter

The caveat: Same as Fivetran — Airbyte Cloud moves data but doesn't store or visualize it. You're still assembling a multi-tool stack.

Best for: Teams that want managed ELT with the flexibility to self-host later, and who are comfortable building the rest of the stack.

A Note on Stitch

You'll still see Stitch recommended in older guides. Stitch was acquired by Talend in 2018, Talend was acquired by Qlik in 2023, and Stitch has been progressively deprioritized since. Its free tier was eliminated, feature investment has stalled, and many users have migrated to Fivetran or Airbyte. If you're evaluating tools today, look elsewhere.

If You Have Engineers and Want Control

Some teams want — or need — to own their data pipelines. Maybe you have non-standard sources, strict compliance requirements, or simply prefer code over configuration. Here's what the code-first landscape looks like in 2026.

dlt (data load tool)

dlt is a Python-first, lightweight ELT library that's become the fastest-growing open-source data loading tool. It's designed for Python developers who want to write pipelines as code without the overhead of a platform.

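```python
import dlt
from dlt.sources.sql_database import sql_database

# Placeholder connection string: point it at your own Postgres database.
# Needs the SQL extras and a driver, e.g. pip install "dlt[sql_database,duckdb]" psycopg2-binary
my_postgres_data = sql_database(credentials="postgresql://user:password@localhost:5432/mydb")

pipeline = dlt.pipeline(
    pipeline_name="pg_pipeline",
    destination="duckdb",
    dataset_name="my_data",
)

pipeline.run(my_postgres_data)
```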

- `pip install dlt` and go: no backends, no containers, no orchestration platform required

- Works inside Jupyter notebooks, Cursor, and any AI code editor

- 8,800+ supported sources — many generated via LLM-assisted pipeline building

- 3M+ PyPI downloads, 6,000+ companies in production

- Backed by Bessemer Venture Partners

dlt is purpose-built for the era of AI-assisted development. You can describe a data source to an LLM, and it can scaffold a working dlt pipeline. That's a fundamentally different workflow than configuring connectors in a web UI.

Best for: Python-savvy teams who want to write pipelines as code, especially those leveraging LLMs for development.

Airbyte OSS (Self-Hosted)

Airbyte's open-source version gives you the same connector catalog as Airbyte Cloud but running on your own infrastructure.

- Full control over data — nothing leaves your network

- Free forever (you pay for infrastructure, not licenses)

- Same 350+ connectors

- Requires Docker or Kubernetes and ongoing ops maintenance

The trade-off: "Free" means free of license cost, not free of engineering time. You'll need someone to handle deployment, upgrades, monitoring, and scaling. For teams with DevOps capacity, it's a strong choice. For teams without, the hidden cost is real.

Best for: Engineering teams with DevOps capacity who want open-source flexibility and full data control.

dbt (data build tool)

dbt isn't an extraction tool — it's the transformation standard. Any modern Postgres ELT discussion has to mention it because dbt handles the T that extraction tools leave behind.

- Version-controlled SQL transformations

- Testing, documentation, and lineage built in

- Runs against your warehouse (Postgres, Snowflake, BigQuery, DuckDB)

- Recently merged with Fivetran — the implications of that consolidation are still unfolding

If you're using Fivetran, Airbyte, or dlt to load raw data into a warehouse, dbt is likely how you'll transform it into useful metrics and models.
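As a sketch of that load-then-transform pattern, the example below uses Python's built-in `sqlite3` as a stand-in for the warehouse. The table and column names are hypothetical, and the `SELECT` is only the kind of statement a dbt model would contain; dbt itself adds the versioning, testing, and lineage around it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Step 1 (load): raw rows land untransformed, as an EL tool would deliver them.
conn.execute(
    "CREATE TABLE raw_orders (id INTEGER, customer TEXT, amount_cents INTEGER, status TEXT)"
)
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?, ?)",
    [
        (1, "acme", 12000, "paid"),
        (2, "acme", 3000, "refunded"),
        (3, "globex", 8000, "paid"),
    ],
)

# Step 2 (transform): a SQL model builds a clean table inside the warehouse.
conn.execute(
    """
    CREATE TABLE customer_revenue AS
    SELECT customer, SUM(amount_cents) / 100.0 AS revenue_usd
    FROM raw_orders
    WHERE status = 'paid'
    GROUP BY customer
    """
)

revenue = conn.execute(
    "SELECT customer, revenue_usd FROM customer_revenue ORDER BY customer"
).fetchall()
print(revenue)
```

Because the raw table is kept, the transformation can be changed and rerun later without re-extracting anything from the source.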

Best for: Any team doing ELT that needs governed, version-controlled transformations. Essential middleware, not a standalone solution.

Target Postgres / Singer / Meltano

The Singer specification (originally from Stitch) defined a standard for ETL scripting: taps extract data, targets load it. Target Postgres from datamill-co was built as a Singer target for loading data into PostgreSQL.
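A toy version of the tap/target split, assuming nothing beyond the standard library. Real taps write these JSON messages to stdout and real targets read them from stdin, so the two are connected with a shell pipe; here a generator stands in for the pipe. The `users` stream and the shape of the state value are hypothetical.

```python
import json
from typing import Iterable, Iterator


def tap(rows: list[dict]) -> Iterator[str]:
    """A toy tap: emits Singer-style SCHEMA, RECORD, and STATE messages as JSON lines."""
    yield json.dumps({
        "type": "SCHEMA",
        "stream": "users",
        "schema": {"properties": {"id": {"type": "integer"}, "email": {"type": "string"}}},
        "key_properties": ["id"],
    })
    for row in rows:
        yield json.dumps({"type": "RECORD", "stream": "users", "record": row})
    if rows:
        # STATE lets the next run resume from where this one stopped.
        yield json.dumps({"type": "STATE", "value": {"users": {"last_id": rows[-1]["id"]}}})


def target(lines: Iterable[str]) -> tuple[dict[str, list[dict]], dict]:
    """A toy target: collects records per stream and keeps the latest state."""
    tables: dict[str, list[dict]] = {}
    state: dict = {}
    for line in lines:
        message = json.loads(line)
        if message["type"] == "SCHEMA":
            tables.setdefault(message["stream"], [])
        elif message["type"] == "RECORD":
            tables[message["stream"]].append(message["record"])
        elif message["type"] == "STATE":
            state = message["value"]
    return tables, state


source_rows = [{"id": 1, "email": "a@example.com"}, {"id": 2, "email": "b@example.com"}]
tables, state = target(tap(source_rows))
print(tables["users"], state)
```

Since the only contract between the two halves is the message stream, any tap can in principle be paired with any target, which is what made the ecosystem composable.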

The Singer ecosystem is still alive, but active development has largely migrated to Meltano (originally from GitLab, later spun out as its own company, and now part of Matatika as noted above). Meltano provides a modern CLI, orchestration, and managed Cloud offering on top of Singer taps and targets.

- Maximum flexibility — write custom taps for any source

- Maximum responsibility — you own the code, the orchestration, and the maintenance

- Meltano adds structure and deployability to what was previously a DIY scripting ecosystem

Best for: Teams with highly custom or non-standard data sources who are comfortable writing and maintaining pipeline code.

If You Need Specialized Replication

Some use cases don't need a general-purpose ETL platform — they need purpose-built replication between specific systems.

pglogical

pglogical is an open-source PostgreSQL extension for logical replication. Originally from 2ndQuadrant (now maintained by EDB after their 2020 acquisition), it enables:

- Postgres-to-Postgres replication with row and column filtering

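- Streaming to Kafka and RabbitMQ subscribers (introduced with pglogical 3, so check that the version you run includes it)

- Replication of schema changes (in the open-source 2.x releases DDL is not replicated automatically; it is pushed explicitly with `pglogical.replicate_ddl_command`)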

- Partition-aware replication
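Row and column filtering can be pictured as follows. This is a plain-Python illustration of the idea, not pglogical's API: in pglogical itself the filters are configured in SQL when a table is added to a replication set. The `ReplicationRule` class and the sample rows are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ReplicationRule:
    """Hypothetical per-table rule: which rows and which columns to replicate."""
    columns: list[str]
    row_filter: Callable[[dict], bool]


def replicate(rows: list[dict], rule: ReplicationRule) -> list[dict]:
    """Keep rows that pass the filter, then project them onto the allowed columns."""
    return [
        {column: row[column] for column in rule.columns}
        for row in rows
        if rule.row_filter(row)
    ]


customers = [
    {"id": 1, "region": "eu", "email": "a@example.com", "card_last4": "4242"},
    {"id": 2, "region": "us", "email": "b@example.com", "card_last4": "1881"},
    {"id": 3, "region": "eu", "email": "c@example.com", "card_last4": "0005"},
]

# Replicate only EU customers, and leave payment details on the source.
eu_rule = ReplicationRule(
    columns=["id", "region", "email"],
    row_filter=lambda row: row["region"] == "eu",
)
subscriber_rows = replicate(customers, eu_rule)
print(subscriber_rows)
```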

pglogical isn't a general ETL tool — it's a replication layer for Postgres-native architectures. If you're running multiple Postgres instances and need to keep them in sync, or you need to stream Postgres changes into a message broker, pglogical is purpose-built for that.

Best for: Enterprise Postgres shops that need real-time replication between Postgres instances or into streaming systems like Kafka.

PeerDB

PeerDB is purpose-built for Postgres-to-ClickHouse CDC replication. If your analytics engine is ClickHouse (increasingly popular for real-time analytics at scale), PeerDB handles the bridge:

- Uses Postgres logical decoding for real-time Change Data Capture

- Optimized specifically for the Postgres → ClickHouse pipeline

- Handles schema mapping, type conversion, and incremental syncing

- Low-latency replication designed for analytics workloads
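To give a feel for what type conversion involves, here is a small sketch that maps a Postgres column listing to ClickHouse types. The mapping table is an assumption made for this example, not PeerDB's actual conversion rules, and `map_columns` is a hypothetical helper.

```python
# Illustrative Postgres -> ClickHouse type mapping. This table is an assumption
# for the sketch, not PeerDB's actual conversion rules.
PG_TO_CLICKHOUSE = {
    "integer": "Int32",
    "bigint": "Int64",
    "text": "String",
    "boolean": "Bool",
    "double precision": "Float64",
    "uuid": "UUID",
    "timestamp": "DateTime64(6)",
}


def map_columns(pg_columns: dict[str, str], nullable: set[str]) -> dict[str, str]:
    """Translate a Postgres column listing into ClickHouse column types."""
    mapped = {}
    for name, pg_type in pg_columns.items():
        if pg_type not in PG_TO_CLICKHOUSE:
            raise ValueError(f"no mapping for Postgres type {pg_type!r} on column {name!r}")
        ch_type = PG_TO_CLICKHOUSE[pg_type]
        # ClickHouse columns are non-nullable unless wrapped in Nullable(...).
        mapped[name] = f"Nullable({ch_type})" if name in nullable else ch_type
    return mapped


orders = {
    "id": "bigint",
    "customer_id": "uuid",
    "total": "double precision",
    "shipped_at": "timestamp",
}
clickhouse_columns = map_columns(orders, nullable={"shipped_at"})
print(clickhouse_columns)
```

Failing loudly on an unmapped type is a deliberate choice in this sketch: silently coercing an unknown type to a string tends to surface later as a confusing analytics bug.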

Best for: Teams running ClickHouse as their analytics engine who need fast, reliable Postgres replication without building custom CDC pipelines.

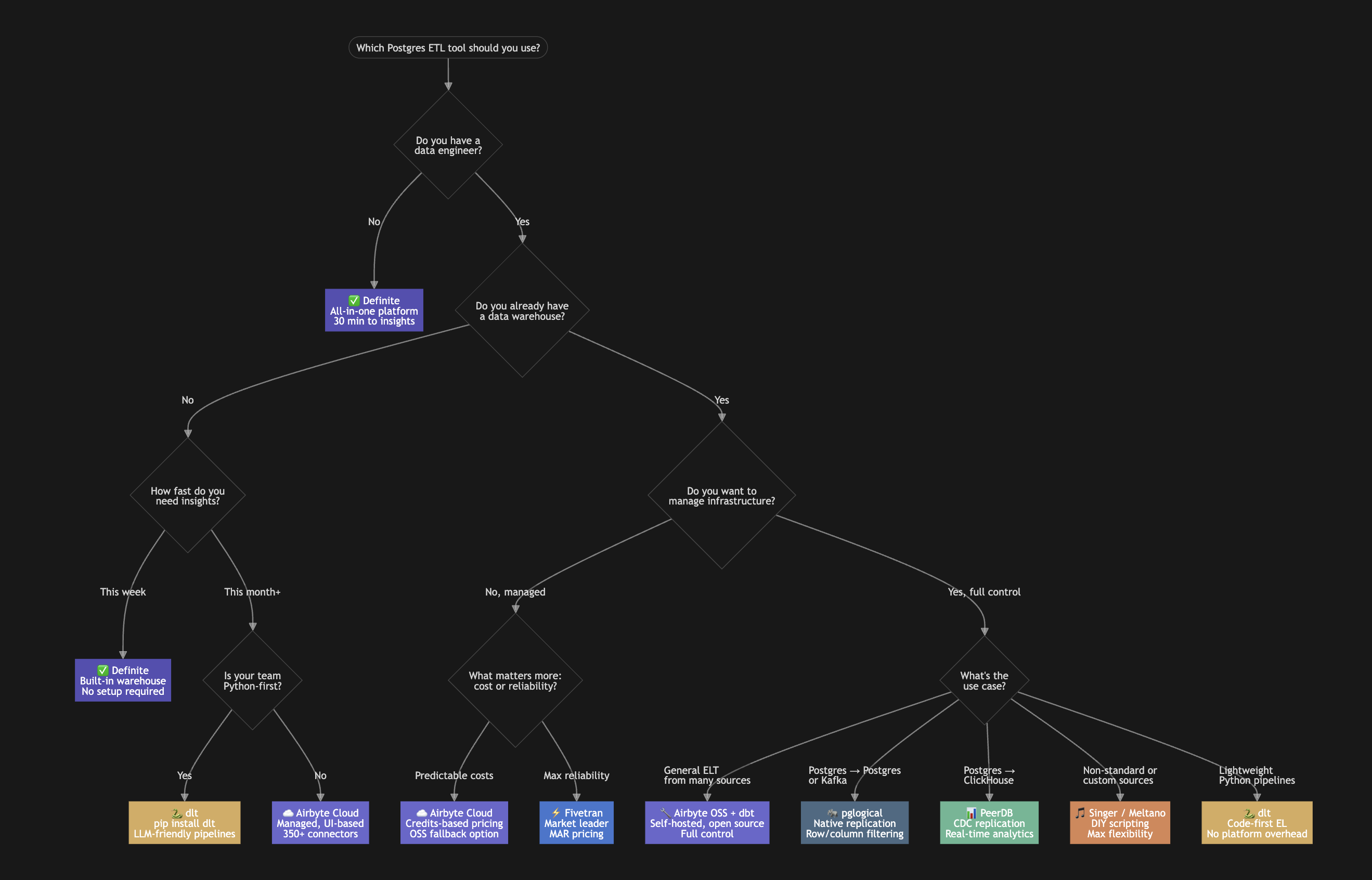

How to Choose: The Decision Framework

The Postgres ETL landscape has consolidated dramatically. In 2019, you had 11+ point tools to evaluate. In 2026, the real question is how much infrastructure you want to own.

| Your Situation | Best Starting Point | Time to First Insight |
|---|---|---|
| Startup, need insights fast, no data engineer | Definite | 30 minutes |
| Have a warehouse, want managed no-code pipelines | Fivetran or Airbyte Cloud | Days to weeks |
| Python team, want lightweight code-first EL | dlt | Hours to days |
| Eng team, want full open-source control | Airbyte OSS + dbt | Days to weeks |
| Need Postgres → Postgres replication | pglogical | Hours |
| Need Postgres → ClickHouse | PeerDB | Hours |
| Maximum DIY, non-standard sources | Singer/Meltano + Target Postgres | Days |

The pattern is clear: the less infrastructure you want to manage, the faster you get to insight — but the more you pay in platform fees. The more control you want, the more engineering time you invest.

For most startups and SMBs, the math favors the all-in-one approach. The "free" open-source path often costs more in engineering time than a managed platform. But for engineering-heavy organizations with specific requirements, the code-first and specialized tools are genuinely excellent — and better than they've ever been.
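The math is easy to run for your own situation. The sketch below uses the warehouse, BI, and flat-price figures quoted in this article; the ingestion fee, upkeep hours, and hourly rate are assumptions chosen purely for illustration, so substitute your own.

```python
# Monthly figures quoted in this article.
WAREHOUSE_USD = (200, 500)
BI_TOOL_USD = (200, 500)
ALL_IN_ONE_USD = 1_000

# Assumptions for illustration only; substitute your own numbers.
INGESTION_USD = (0, 500)        # hypothetical: free OSS up to a modest managed plan
ENGINEER_HOURS_PER_MONTH = 10   # hypothetical upkeep time for the assembled stack
ENGINEER_HOURLY_USD = 75        # hypothetical loaded hourly rate


def assembled_stack_cost() -> tuple[int, int]:
    """Return the (low, high) monthly cost of the multi-tool stack, labor included."""
    labor = ENGINEER_HOURS_PER_MONTH * ENGINEER_HOURLY_USD
    low = WAREHOUSE_USD[0] + BI_TOOL_USD[0] + INGESTION_USD[0] + labor
    high = WAREHOUSE_USD[1] + BI_TOOL_USD[1] + INGESTION_USD[1] + labor
    return low, high


low, high = assembled_stack_cost()
print(f"assembled stack: ${low:,}-${high:,}/mo vs all-in-one: ${ALL_IN_ONE_USD:,}/mo")
```

The result is most sensitive to the upkeep hours: set them near zero and the assembled stack can come out cheaper, which is the case for teams that already have the engineering capacity in place.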

The Definite Advantage

If you've read this far and thought "I just want to connect my Postgres database and start getting answers" — that's exactly what Definite is built for.

Unified: All your data in one place. 500+ connectors bring product, ops, and SaaS data together — including your Postgres database. No separate ETL tools, no separate warehouse, no separate BI platform.

Simple: True self-service. The Canvas works like a spreadsheet. The governed semantic layer ensures everyone sees the same numbers. Your team doesn't need SQL expertise or data engineering skills.

AI-Powered: Ask "What's driving churn this month?" in plain English and get an instant answer. Fi, Definite's AI analyst, summarizes trends, finds anomalies, and automates reports — no prompt engineering required.

Open: Built on open standards — DuckDB, Iceberg/Parquet, Cube.dev. Export your data and queries anytime. No vendor lock-in.

Get started with Definite — 30 minutes from signup to Postgres analytics. Or request a demo to see how it compares to building your own Postgres ETL pipeline.