Snowflake Alternatives for Startups: Which Data Warehouse Should You Actually Use?

Mike Ritchie

If you're leading a startup looking at data warehouses, you've probably seen Snowflake come up everywhere. But you've also seen the horror stories: unpredictable bills, costs way higher than expected, and a perceived lack of ROI.

So what are your options?


Table of Contents

- Quick Comparison

- Why People Look for Snowflake Alternatives

- How to Choose a Data Platform: 5 Things That Actually Matter

- Google BigQuery

- Amazon Redshift

- Databricks

- The Real Problem

- A Simpler Alternative for Startups

- When to Use Each Option

Quick Comparison

| Platform | Architecture | Cloud Lock-in | Pricing Model | Best For |
|---|---|---|---|---|
| Snowflake | Cloud warehouse | Multi-cloud | Consumption-based | SQL analytics |
| BigQuery | Serverless | Google Cloud only | Pay-per-query | Ad-hoc analysis |
| Redshift | Cluster-based | AWS only | Reserved capacity | AWS workloads |
| Databricks | Lakehouse | Multi-cloud | DBU-based | ML, data engineering |
| Definite | All-in-one | N/A | Flat monthly | Startups |

Why People Look for Snowflake Alternatives

Snowflake is genuinely powerful. It separates storage and compute, scales automatically, and handles complex queries well. But the pricing is consumption-based, and that can get unpredictable fast.

Some companies report bills 200 to 300% higher than they expected. Instacart, for example, reportedly spent over $50 million a year on Snowflake.

For a startup, that unpredictability is a real problem.

Snowflake has expanded beyond warehousing with Snowpark, Cortex for AI, and ML capabilities. But the core challenge remains: Snowflake was architected for enterprise operations, not lean startup teams. It demands data engineers, ETL pipelines, BI tools, and extensive setup.

Let's look at the alternatives.

How to Choose a Data Platform: 5 Things That Actually Matter

Before diving into specific platforms, here's what actually matters when evaluating data infrastructure for a startup:

| Criterion | Why It Matters | What to Look For |
|---|---|---|
| Time-to-Value | Startups need answers now, not in 3 months | Dashboard creation in hours, not weeks |
| Engineering Overhead | Data engineers cost $150K+/year | Self-serve capabilities, minimal technical staff |
| Cost Predictability | Runway matters | Fixed pricing, no surprise bills |
| Complexity | More tools = more maintenance | Unified platform vs. stitched-together stack |
| Startup Alignment | Enterprise tools serve enterprise needs | Purpose-built for fast-moving teams |

Keep these criteria in mind as we evaluate each platform.

Google BigQuery

BigQuery is Google's serverless data warehouse. The big selling point is zero infrastructure management. You don't provision clusters, you don't manage nodes. You just run queries.

How BigQuery Pricing Works

BigQuery's default on-demand pricing charges for the data each query scans, not for compute time; capacity-based pricing, where you reserve compute slots, is also available. For ad-hoc analysis and variable workloads, on-demand can save a lot of money. But if you run a lot of queries over large tables, costs add up fast.
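A rough way to sanity-check on-demand spend is to add up bytes scanned. This minimal sketch assumes the $6.25-per-TiB on-demand rate and a free first 1 TiB per month (check Google's current pricing for your region), and it ignores storage, cached results, and per-query minimums. The function name and the workload are hypothetical.

```python
TIB = 1024 ** 4  # bytes in one tebibyte


def bigquery_on_demand_cost(
    bytes_scanned_per_query: list[int],
    price_per_tib: float = 6.25,      # assumed on-demand list rate; verify for your region
    free_tib_per_month: float = 1.0,  # assumed monthly free tier
) -> float:
    """Estimate one month of on-demand query charges in dollars.

    Hypothetical helper: ignores storage, cached results, and per-query minimums.
    """
    total_tib = sum(bytes_scanned_per_query) / TIB
    billable_tib = max(0.0, total_tib - free_tib_per_month)
    return billable_tib * price_per_tib


GIB = 1024 ** 3
# Hypothetical workload: one dashboard query scanning 50 GiB, refreshed hourly for 30 days.
queries = [50 * GIB] * (24 * 30)
print(round(bigquery_on_demand_cost(queries), 2))  # 213.48
```

Refreshing that same dashboard every 10 minutes instead of hourly multiplies the bytes scanned, and roughly the bill, by six, which is why partitioning and clustering tables to cut bytes scanned matters so much on this model.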

Where BigQuery Shines

If you're already on Google Cloud, the integration is seamless. BigQuery handles petabyte-scale data, supports real-time streaming, and has built-in machine learning features.

BigQuery has also added BigQuery ML for training models with SQL, Vertex AI integration, and Dataform for transformations.

BigQuery Limitations

You're locked into Google Cloud. BigQuery Omni offers some cross-cloud querying, but it's still limited. And like Snowflake, you still need ETL tools and BI platforms on top.

| Strengths | Limitations |
|---|---|
| Serverless, zero infrastructure | Google Cloud only |
| Pay-per-query pricing | Costs add up with heavy querying |
| Petabyte scale | Still need ETL and BI tools |
| Built-in ML | Complex pricing tiers |

Amazon Redshift

Redshift is AWS's data warehouse, and it's been around since 2013. It uses a cluster-based architecture with columnar storage, optimized for analytical queries.

The Redshift Advantage

If you're an AWS shop, everything integrates. S3, Lambda, Glue, SageMaker. It's all connected.

Redshift Serverless now offers pay-per-use billing too: instead of provisioning a cluster, you pay for the compute your workloads consume (measured in RPU-hours), which gives you more flexibility.

Redshift Pricing

Pricing is more predictable than Snowflake's if you use reserved nodes: you commit to capacity for a one- or three-year term and get a discount in return. For steady workloads, this can be cheaper.
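Whether reserved capacity pays off comes down to utilization: a reserved node is billed for every hour of the term, while an on-demand provisioned cluster is billed only while it runs (for example, if you pause it off-hours). This sketch uses hypothetical per-node-hour rates, not AWS prices; plug in the current rates for your node type and region.

```python
HOURS_PER_YEAR = 24 * 365


def annual_on_demand(rate_per_node_hour: float, nodes: int, utilization: float) -> float:
    """On-demand: billed only for the fraction of hours the cluster runs (0.0 to 1.0)."""
    return rate_per_node_hour * nodes * HOURS_PER_YEAR * utilization


def annual_reserved(effective_rate_per_node_hour: float, nodes: int) -> float:
    """Reserved: billed for every hour of the term, whether the cluster runs or not."""
    return effective_rate_per_node_hour * nodes * HOURS_PER_YEAR


def break_even_utilization(on_demand_rate: float, reserved_rate: float) -> float:
    """Utilization above which reserved capacity is cheaper than on-demand."""
    return reserved_rate / on_demand_rate


# Hypothetical rates: $1.00/node-hour on demand vs. an effective $0.65/node-hour reserved.
print(break_even_utilization(1.00, 0.65))  # 0.65
for utilization in (0.4, 0.9):
    od = annual_on_demand(1.00, 2, utilization)
    rsv = annual_reserved(0.65, 2)
    print(f"{utilization:.0%} busy: on-demand ${od:,.0f} vs reserved ${rsv:,.0f}")
```

On these made-up numbers, a two-node cluster that is busy 40% of the time is cheaper on demand, while one busy 90% of the time is cheaper reserved.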

Redshift Features

Redshift has added Redshift ML for SageMaker integration, Spectrum for querying S3 directly, and Serverless for pay-per-use.

But for the most part, it's still AWS only and doesn't have a lot of multi-cloud flexibility.

| Strengths | Limitations |
|---|---|
| AWS-native integration | AWS only |
| Predictable pricing (reserved) | Cluster management (unless Serverless) |
| Mature, battle-tested | Still need ETL and BI tools |
| Spectrum for S3 queries | Less flexible than multi-cloud options |

Databricks

Databricks is different from the others. It's not a traditional data warehouse. It's a data lakehouse.

What Is a Lakehouse?

A lakehouse combines the flexibility of a data lake with the performance of a data warehouse. Databricks runs on Apache Spark and supports structured, semi-structured, and unstructured data.

If you're doing heavy machine learning or data engineering, Databricks is built for that.

Databricks Multi-Cloud

Databricks is truly multi-cloud. It runs on AWS, Azure, and GCP. You're not locked into one provider.

Databricks Downsides

The downside: Databricks is complex. It's designed for data engineers and data scientists, not necessarily analysts. There's a steep learning curve.

And the price can get expensive fast. Many companies spend between $50,000 and $200,000 or more annually, even for moderate usage.
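DBU-based pricing has two parts that are easy to miss when budgeting. Databricks bills for DBUs (the DBUs a cluster consumes per hour depend on its instance types, and the price per DBU depends on the workload type and pricing tier), and on classic compute the cloud provider separately bills for the underlying VMs; serverless options fold infrastructure into the DBU rate. This sketch models the classic case with hypothetical rates; the class and field names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class ClusterMonth:
    """One cluster's usage over a month. All rates are hypothetical inputs."""
    nodes: int                    # driver plus workers
    hours: float                  # hours the cluster was running
    dbu_per_node_hour: float      # depends on instance type
    price_per_dbu: float          # depends on workload type and pricing tier
    vm_cost_per_node_hour: float  # billed by the cloud provider on classic compute

    def databricks_bill(self) -> float:
        return self.nodes * self.hours * self.dbu_per_node_hour * self.price_per_dbu

    def cloud_bill(self) -> float:
        return self.nodes * self.hours * self.vm_cost_per_node_hour

    def total(self) -> float:
        return self.databricks_bill() + self.cloud_bill()


# Hypothetical: a 4-node cluster left running 200 hours in a month.
usage = ClusterMonth(nodes=4, hours=200, dbu_per_node_hour=1.0,
                     price_per_dbu=0.40, vm_cost_per_node_hour=0.50)
print(f"Databricks ${usage.databricks_bill():,.2f} + cloud ${usage.cloud_bill():,.2f} "
      f"= ${usage.total():,.2f}")
```

The cloud bill can rival the Databricks bill itself, and both scale with uptime, so auto-terminating idle clusters matters as much as the DBU rate.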

| Strengths | Limitations |
|---|---|
| Lakehouse architecture | Complex, steep learning curve |
| Multi-cloud | Expensive ($50K-$200K+/year) |
| Best for ML and streaming | Built for engineers, not analysts |
| Handles all data types | Overkill for simple analytics |

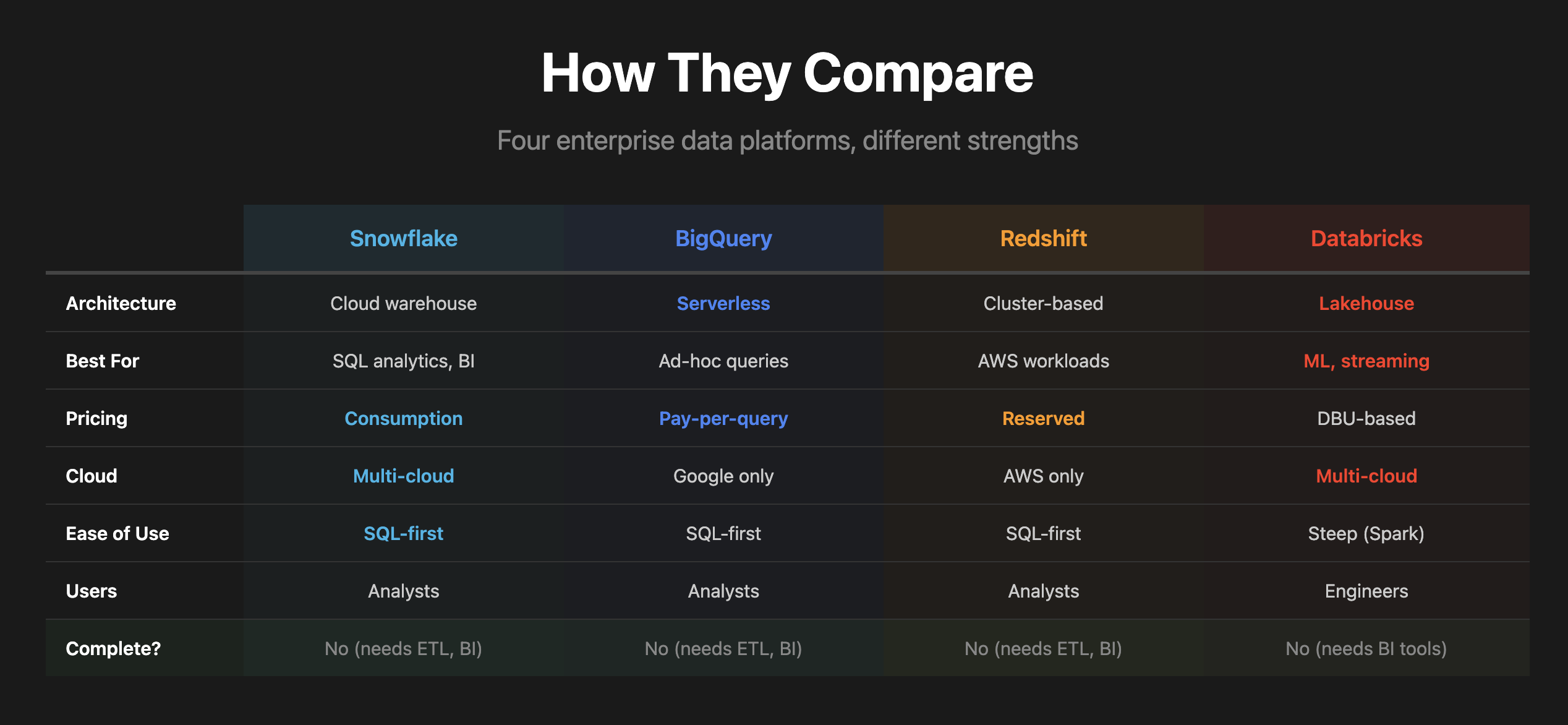

How They Compare

Here's how all four platforms stack up:

| Factor | Snowflake | BigQuery | Redshift | Databricks |
|---|---|---|---|---|
| Architecture | Cloud warehouse | Serverless | Cluster-based | Lakehouse |
| Best For | SQL analytics | Ad-hoc queries | AWS workloads | ML, data engineering |
| Pricing | Consumption | Pay-per-query | Reserved capacity | DBU-based |
| Cloud | Multi-cloud | Google only | AWS only | Multi-cloud |
| Ease of Use | SQL-first | SQL-first | SQL-first | Steep (Spark) |
| Primary Users | Analysts | Analysts | Analysts | Engineers |

All four have expanded their capabilities. Snowflake has Cortex. BigQuery has BigQuery ML. Redshift has Spectrum and ML. Databricks has a full lakehouse. They're all trying to become complete platforms.

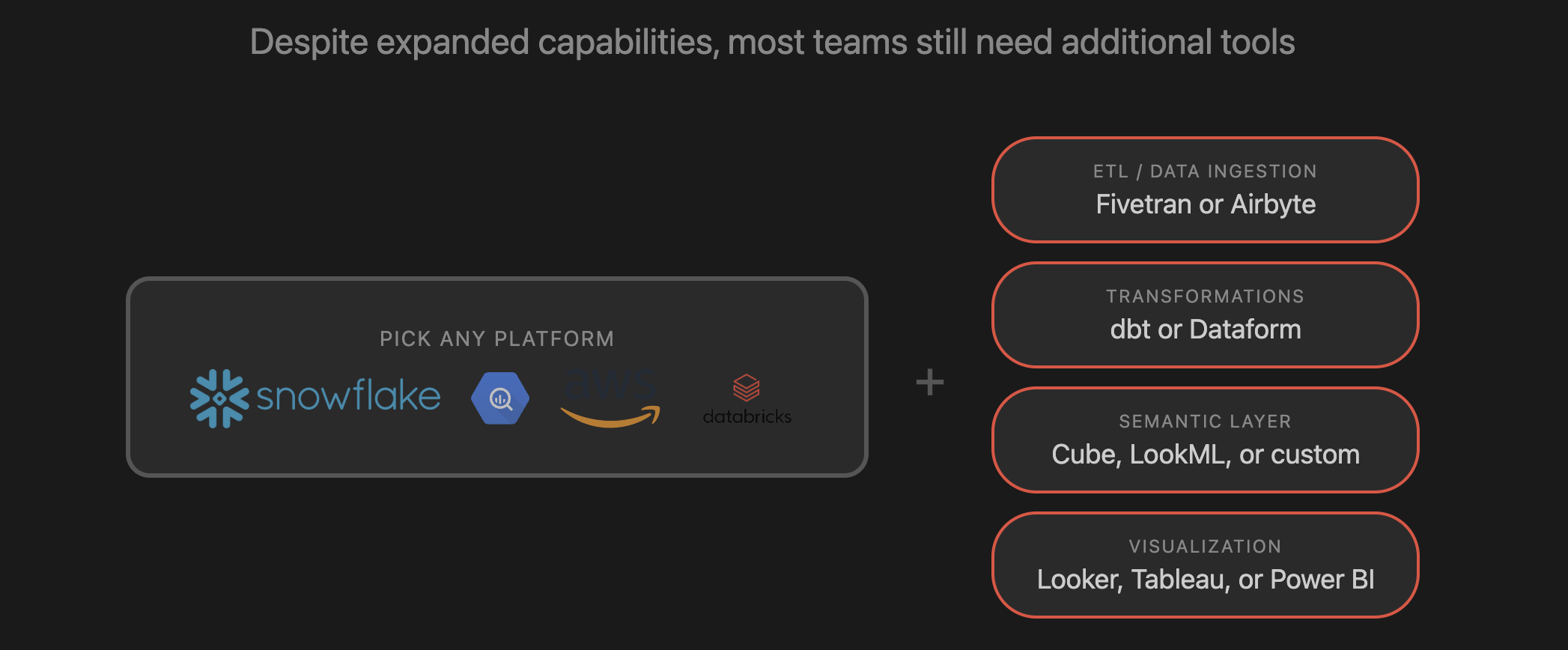

The Real Problem

Here's what nobody talks about: the fragmentation problem that killed the modern data stack. In practice, most companies still need to bolt on additional tools:

- ETL with Fivetran or Airbyte

- Transformations with dbt or Dataform

- Visualization with Looker, Tableau, or Power BI

The native capabilities exist in these data warehouses, but they're often not as mature or integrated as the specialized tools.

The True Cost of a Data Stack

That's four or five different products, four or five different bills, and probably a data engineer just to keep everything running. For most startups, that's massive overkill.

| Layer | Tool Examples | Typical Cost |
|---|---|---|
| Data Warehouse | Snowflake, BigQuery, Redshift | $2,000 - $10,000+/mo |
| ETL / Data Syncing | Fivetran, Airbyte | $500 - $2,000+/mo |
| Transformations | dbt Cloud, Dataform | $100 - $500/mo |
| BI / Dashboards | Looker, Tableau, Power BI | $1,000 - $5,000+/mo |
| Data Engineer | Salary | $10,000+/mo |

Add up the table and a stack built on Snowflake, BigQuery, or Redshift runs roughly $3,600 to $17,500+ a month in tools alone, or $13,600 to $27,500+ once you include a data engineer. And it takes weeks or months to set up properly. (For a detailed breakdown at each growth stage, see our B2B SaaS data stack cost guide or use the warehouse cost estimator.)
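Summing the rows makes the totals concrete. This sketch adds the low and high ends of each monthly range in the cost table; rows marked "+" can run higher than the high end used here, and the data engineer row, which gives only a lower bound, is entered as $10,000 at both ends.

```python
# Monthly cost ranges (low, high) in dollars, copied from the cost table.
# Rows marked "+" in the table can run higher than the high end shown here.
STACK = {
    "Data Warehouse": (2_000, 10_000),
    "ETL / Data Syncing": (500, 2_000),
    "Transformations": (100, 500),
    "BI / Dashboards": (1_000, 5_000),
    "Data Engineer": (10_000, 10_000),  # table gives only "$10,000+/mo"
}


def total_range(layers: dict[str, tuple[int, int]],
                include_engineer: bool = True) -> tuple[int, int]:
    """Sum the low and high ends of each layer's monthly cost range."""
    low = high = 0
    for name, (lo, hi) in layers.items():
        if name == "Data Engineer" and not include_engineer:
            continue
        low += lo
        high += hi
    return low, high


print(total_range(STACK, include_engineer=False))  # (3600, 17500)
print(total_range(STACK))                          # (13600, 27500)
```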

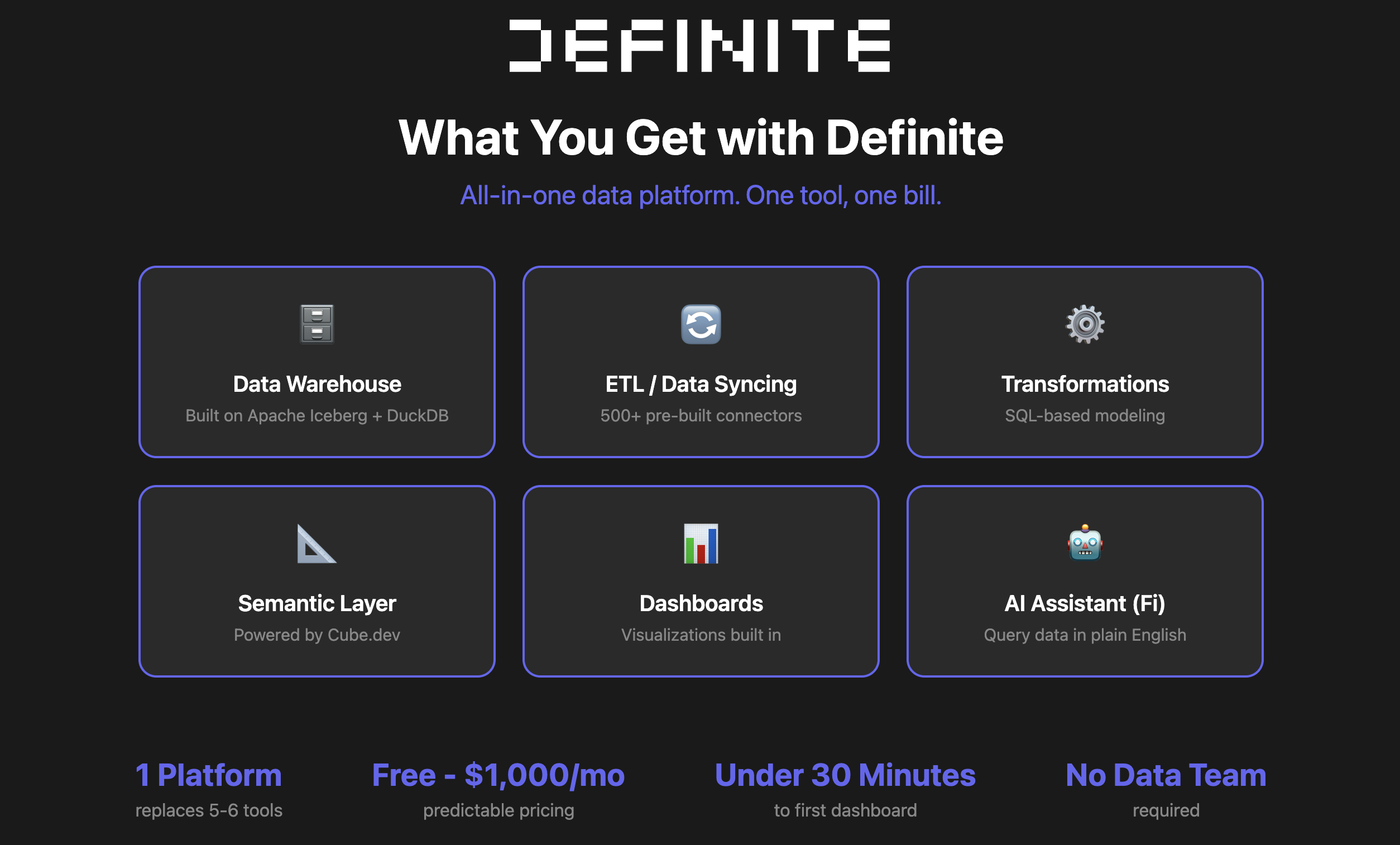

A Simpler Alternative for Startups

If you're a startup, you probably don't need any of these.

Definite is an all-in-one data platform: data warehouse, ETL, transformations, semantic layer, dashboards, and AI assistant. One platform, one bill.

You sign up, connect your data sources, and you're analyzing data in under 30 minutes. No Fivetran. No dbt. No data engineer required.

What Definite Includes

| Component | What It Does |
|---|---|
| Data Warehouse | Built on Apache Iceberg and DuckDB |
| ETL / Data Syncing | 500+ pre-built connectors |
| Transformations | SQL-based modeling |
| Semantic Layer | Powered by Cube.dev |
| Dashboards | Visualizations built in |
| AI Assistant | Fi for plain English queries |

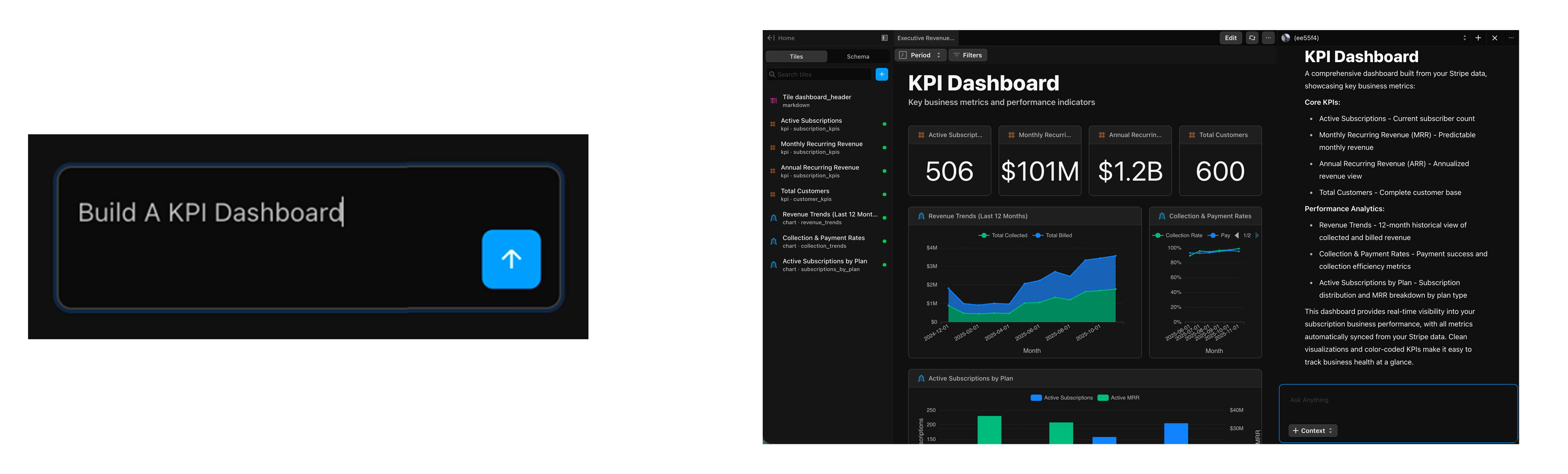

How It Works

- Connect your data sources: Definite has over 500 pre-built connectors for tools like Stripe, HubSpot, Salesforce, Attio, Postgres, and more.

- Create a dashboard: Start talking to Fi, the AI assistant. Ask questions like "What's our ARR by month? Build me a dashboard."

- Fi handles the rest: Fi finds the data that best answers your question, builds data models in the background, writes the query, and creates the visualization.

- Customize everything: Review the underlying SQL or change the design of the charts. You're in control.
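As a generic illustration (not how Fi actually works) of the computation behind a question like "What's our ARR by month?", here is a plain-Python sketch over hypothetical subscription records. The `Subscription` fields, the month-granularity rules, and the ARR = 12 × MRR convention are assumptions, not Definite's data model or Fi's generated SQL.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterator, Optional


@dataclass
class Subscription:
    """Hypothetical subscription record, e.g. as synced from a billing tool."""
    customer: str
    monthly_amount: float       # recurring revenue normalized to one month
    start: date                 # active from this calendar month
    end: Optional[date] = None  # inactive from this calendar month; None = still active


def _ym(d: date) -> tuple[int, int]:
    return (d.year, d.month)


def month_starts(first: date, last: date) -> Iterator[date]:
    """Yield the first day of each calendar month from first to last, inclusive."""
    year, month = first.year, first.month
    while (year, month) <= _ym(last):
        yield date(year, month, 1)
        month += 1
        if month == 13:
            year, month = year + 1, 1


def arr_by_month(subs: list[Subscription], first: date, last: date) -> dict[date, float]:
    """ARR per month, using the common convention ARR = 12 x MRR of active subscriptions."""
    result = {}
    for month in month_starts(first, last):
        mrr = sum(
            s.monthly_amount
            for s in subs
            if _ym(s.start) <= _ym(month) and (s.end is None or _ym(month) < _ym(s.end))
        )
        result[month] = 12 * mrr
    return result


subs = [
    Subscription("acme", 100.0, date(2024, 1, 1)),
    Subscription("globex", 50.0, date(2024, 2, 10), end=date(2024, 3, 1)),
]
for month, arr in arr_by_month(subs, date(2024, 1, 1), date(2024, 3, 1)).items():
    print(month.isoformat(), arr)
```

A production model would also need to handle annual plans, discounts, refunds, and multiple currencies.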

That's it. From zero to dashboards in minutes, not months. A 40-person e-commerce team went from 15-second dashboard loads on their old Snowflake stack to sub-second queries on Definite. (For the full setup walkthrough, see our startup data stack guide.)

Why Definite Wins on the 5 Criteria

| Criterion | Enterprise Warehouses | Definite |
|---|---|---|
| Time-to-Value | Weeks to months | Under 30 minutes |
| Engineering Overhead | Requires data engineers | Self-serve, no technical staff |
| Cost Predictability | Consumption-based, hard to forecast | Fixed pricing, transparent |
| Complexity | Multiple tools, significant configuration | Unified platform |
| Startup Alignment | Enterprise-focused | Purpose-built for startups |

Teams typically get their first dashboard live within 30 minutes of signing up, and one Series A SaaS company cut their analytics spend from $2,400/month to $250/month after consolidating from a Snowflake-based stack onto Definite.

Built on Open Standards

Definite uses open-source technologies: DuckDB for fast in-process analytics, Apache Iceberg for scalable storage, and Cube.dev for the semantic layer. Your data isn't locked in: Iceberg is natively supported by both Snowflake and Databricks if you ever need to migrate.

When to Use Each Option

Choose Snowflake, BigQuery, Redshift, or Databricks When:

- You have petabytes of data

- You have complex ML workloads

- You have a dedicated data engineering team

- You're managing complex legacy systems with extensive ecosystem integrations

Specific Recommendations:

- Choose BigQuery if you're on Google Cloud and want serverless

- Choose Redshift if you're on AWS and want predictable costs

- Choose Databricks if you're doing serious machine learning

Choose Definite When:

- You're a startup that needs analytics now

- You don't want to hire a data team

- You want predictable costs

- You prioritize simplicity over extensive features

Start simple. Get value fast. You can always migrate later if you need enterprise scale.

FAQ

Why is Snowflake pricing unpredictable?

Snowflake uses consumption-based pricing: you pay compute credits for the time your virtual warehouses are running, not per query. This means costs vary with usage patterns, and complex queries, inefficient workloads, or warehouses left running idle can spike bills unexpectedly. Many companies report bills 200-300% higher than budgeted.
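Here is a minimal sketch of how warehouse settings, not just query volume, drive the credit count. It assumes per-second billing with a 60-second minimum each time a warehouse resumes, the standard credits-per-hour rates by size (XS = 1, doubling with each size up), and that every burst of queries starts from a suspended warehouse. Multi-cluster warehouses, cloud-services credits, and storage are ignored, and the bursts and settings are hypothetical.

```python
# Standard warehouse credit rates per hour by size (assumed; verify against
# Snowflake's current documentation, since other warehouse types may differ).
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}

MIN_BILLED_SECONDS = 60.0  # minimum charge each time a warehouse starts or resumes


def billed_seconds(active_seconds: float, auto_suspend_seconds: float) -> float:
    """Seconds billed for one burst that starts from a suspended warehouse.

    The warehouse keeps running (and billing) until auto-suspend fires after
    the last query, and every resume is billed for at least 60 seconds.
    """
    return max(MIN_BILLED_SECONDS, active_seconds + auto_suspend_seconds)


def monthly_credits(bursts: list[float], size: str, auto_suspend_seconds: float) -> float:
    """Credits for a month of isolated query bursts (hypothetical model)."""
    seconds = sum(billed_seconds(b, auto_suspend_seconds) for b in bursts)
    return seconds / 3600 * CREDITS_PER_HOUR[size]


# Hypothetical month: 300 bursts of 20 seconds of query time on a Medium warehouse.
bursts = [20.0] * 300
for auto_suspend in (60, 600):
    credits = monthly_credits(bursts, "M", auto_suspend)
    print(f"auto-suspend {auto_suspend:>3}s: {credits:.1f} credits")
```

Same queries, same warehouse: the 600-second auto-suspend setting bills 7.75 times as many credits as the 60-second one, because the warehouse keeps running idle after each burst. Multiply by your per-credit price, which varies by edition, region, and contract, to get dollars.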

Is BigQuery cheaper than Snowflake?

It depends on your usage pattern. BigQuery's on-demand model works well for ad-hoc, variable workloads; for consistent, heavy querying, costs can exceed Snowflake's. On-demand queries cost $6.25 per TiB scanned, after a free first 1 TiB each month.

Can I use Databricks without data engineers?

Databricks is designed for data engineers and data scientists. While it has SQL capabilities, the platform assumes familiarity with Spark, notebooks, and cluster management. Most teams need engineering expertise to use it effectively.

What if I outgrow Definite?

Definite uses Apache Iceberg, an open table format that both Snowflake and Databricks natively support. Your SQL models and semantic layer definitions transfer. Starting lean doesn't mean locking yourself in.

Do I really need a data warehouse?

If you're a startup with data in multiple SaaS tools (Stripe, HubSpot, etc.) and want unified analytics, you need somewhere to centralize that data. The question is whether you need an enterprise warehouse or an all-in-one platform that handles everything.

How does Definite compare on security?

Definite delivers enterprise-grade security: encryption, multi-factor authentication, and role-based access control. These features are built in by default, without requiring specialized security personnel.

Who Is This For?

- Startup founders evaluating data warehouse options

- Data leaders comparing Snowflake alternatives

- Technical founders who want analytics without over-engineering

- Small teams overwhelmed by managing multiple data tools

- Anyone tired of paying $5,000+ a month for dashboards

If you have petabytes of data and a dedicated data engineering team, Snowflake, BigQuery, Redshift, or Databricks makes sense. But if you're a startup trying to make better decisions faster, you want something lean.

Get Started

Stop over-engineering. Get analytics running this afternoon.

Try Definite free and go from raw data to live dashboards in under 30 minutes.

- Read the docs: docs.definite.app

- See pricing: definite.app/pricing